Multimodality and General Artificial Intelligence – One Small Step for Humans, One Giant Step for Machine Intelligence?

Multimodal artificial intelligence has undoubtedly become one of the buzzwords of this year. Such trends are usually accompanied by big promises, for example that these models represent a major step towards the ideal of Artificial General Intelligence (AGI). How likely are these promises to come true? And, more generally, why would task independence be such a huge step forward, and what does multimodality have to do with it?

Perhaps we should start from the fact that all existing AI models (even the latest advances in generative AI – GAI) are fundamentally task-dependent. This means that each such model is capable of doing essentially one thing. Chatbots (even the most sophisticated ones), for instance, expect questions and provide the most likely answer based on their training data. Image generators typically expect text input, and their response is a matrix of pixel color values that the human eye perceives as an image. What all these models have in common is that they cannot effectively do anything other than what they have been trained to do. Image generators, for example, typically do not contain a language model. Accordingly, the text given to them as an instruction is only one element of a mapping, to which they assign the most likely image.

Source: DALL·E 3.

To create a model that can perform multiple tasks, we have two options. Either we continually train an existing model on new tasks, or we design the model so that it can do more than one task by default. We will see that multimodal models fall into this latter category.

So, the question may arise: why not build a generic model and then train it successively for different tasks? The answer lies in the physical architecture behind today’s AI technologies. These models are built by transforming the received training data into some internal representations (typically multidimensional vectors). During learning, an abstraction is then performed over these features and the resulting information is stored as ‘knowledge’.

But what would happen if we wanted to train such a model for a new task? If we look at how people learn, the intuitive answer is that new information is added to what the model has already stored. But this is a human perspective and only that. In our case, for example, learning a new foreign language does not mean that we will forget our mother tongue. However, the world of machine learning works according to drastically different rules.

Although the neurobiological background of this process is still not fully understood, we know that synaptic plasticity plays a crucial role in learning in the human brain. When new information is stored or new skills are learned, the topology of the network of neurons in the human brain alters. New connections are created, and existing connections are strengthened. The key is that in such a case, it is not the whole network of neurons that is transformed, but only a subset of them.

Artificial neural models are structured in a broadly similar way; the numeric values stored in artificial neurons and in their connections (collectively called parameters) are responsible for storing information about a task. However, in this kind of network, a standard training process affects the entire network. Regardless of whether it is an RNN, LSTM, or Transformer-based architecture, all the parameters in the model are updated when a new task is learned.

This has two important practical consequences. One is that adding either a new task or new training data to the model will very quickly lead to the model ‘discarding’ what it knew before. From this point on, it will only be able to generalize effectively over the information it currently knows. This phenomenon is known in the literature as ‘catastrophic forgetting’.
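The effect can be reproduced even on a toy scale. The following is a minimal sketch, not a realistic neural network: a two-weight linear model is trained with plain gradient descent first on one task and then on a second, deliberately conflicting one. The tasks, data points, learning rate, and function names are all illustrative assumptions.

```python
# Toy illustration of catastrophic forgetting (illustrative assumptions only):
# a two-weight linear model y = w[0]*x[0] + w[1]*x[1] is trained on task A,
# then on task B, with no access to task A's data during the second phase.

def predict(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def mse(w, data):
    """Mean squared error of the model on a list of (input, target) pairs."""
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)

def train(w, data, lr=0.1, epochs=200):
    """Plain gradient descent; every weight is updated at every step."""
    w = list(w)
    for _ in range(epochs):
        grads = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grads[i] += 2 * err * xi / len(data)
        w = [wi - lr * g for wi, g in zip(w, grads)]
    return w

inputs = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (-1.0, 1.0)]
task_a = [(x, 2.0 * x[0] + 1.0 * x[1]) for x in inputs]   # hypothetical task A
task_b = [(x, -1.0 * x[0] + 3.0 * x[1]) for x in inputs]  # hypothetical task B

w = train([0.0, 0.0], task_a)
loss_a_before = mse(w, task_a)   # close to zero: task A has been learned

w = train(w, task_b)             # continue training on task B only
loss_a_after = mse(w, task_a)    # large again: task A has been overwritten
loss_b_after = mse(w, task_b)    # close to zero: task B has been learned

print(loss_a_before, loss_a_after, loss_b_after)
```

Because both weights are free to move during the second training phase, nothing anchors them to the values that solved task A, so the error on task A grows back once task B has been learned.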

The other follows from the black box nature of such networks. In the internal structure of today’s models, it is in most cases not known exactly which parameters are responsible for storing a specific piece of information or a given type of generalization. In other words, even if the training process were changed, it is not certain that we would be able to identify exactly which neurons or connections are important for the task already mastered. And without this, we cannot effectively ensure that learning a new task does not come at the expense of the skills already acquired.

Although attempts have been made to identify relevant information (e.g., parameter importance ranking – PIR), these methods are still in their infancy.

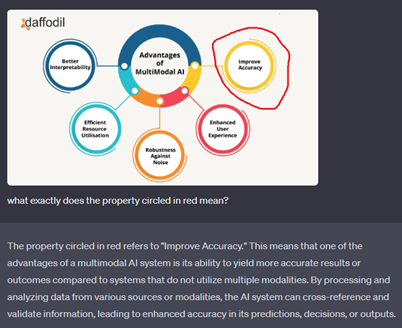

Another possible way to extend the capabilities of models is to combine different data modalities (audio files, images, texts, etc.) during the initial training of the model. The essence of this method is to map traditionally separate data sources into a single representation (vector) space during model building. The advantage is that the resulting models are able to match, for instance, textual prompts with the images to be generated. Another useful capability can be seen in GPT-4v, OpenAI’s newly released model: it can interpret queries that arrive together with user-uploaded images, since it has both a language model and the ability to process images.
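To make the idea of a single representation space more tangible, here is a minimal sketch in which texts and images are represented by vectors of the same dimensionality and matched by cosine similarity. The three-dimensional vectors, file names, and captions are invented for illustration; in a real system they would be produced by trained encoders, and nothing here reflects how any particular model is implemented.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings: both modalities live in the same 3-dimensional space.
image_vectors = {
    "cat_photo.png": [0.9, 0.1, 0.0],
    "car_photo.png": [0.1, 0.9, 0.1],
    "beach_photo.png": [0.0, 0.2, 0.9],
}
text_vectors = {
    "a sleeping cat": [0.8, 0.2, 0.1],
    "a red sports car": [0.2, 0.8, 0.0],
}

def best_image(text):
    """Return the image whose vector lies closest to the text's vector."""
    query = text_vectors[text]
    return max(image_vectors, key=lambda name: cosine(query, image_vectors[name]))

print(best_image("a sleeping cat"))
print(best_image("a red sports car"))
```

Because both kinds of input end up in the same space, a text can be compared with an image just as easily as with another text, which is what makes tasks such as text-based image search possible.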

This kind of data handling can have many advantages. For example, in the case of chatbots, different data modalities can support each other in formulating a question. The situation is a bit like asking, in conversation, whom in the company you can turn to for help with a question. If the other person can only describe the colleague’s position or appearance, or perhaps give a name, you need several extra steps to identify that colleague. But if the colleague is present and the other person can simply point, the situation becomes much simpler.

More generally, the possibility of multimodal inputs can increase the accuracy of models by making it easier to clarify a context for the model.

The question is: can we consider this step as an important milestone on the road to AGI? Well, sort of…

Figure 1: Example of a multimodal model: GPT-4v’s answer to the question of what the detail highlighted by the user in the uploaded image means (uploaded image source).

Some projects explicitly promise that the multimodal model they develop (Large Multimodal Model – LMM) will be a big step towards AGI. The main argument is usually that common sense is essentially built on multimodal foundations. Undoubtedly, people rely on several modalities simultaneously in their everyday lives, which lets them organize information about the world effectively and develop practical, everyday knowledge. An essential part of this is the processing of implicit information and the discovery of practically useful regularities. Indeed, without these observations we cannot form a complete picture of the world around us.

Soon, the main question will be to what extent we can really say that these LMMs are task-independent. The results so far mostly show that what happens here is the mapping of connections between different modalities, as well as the efficient processing of abstractions over these connections. The emergence of multimodal AI models is undoubtedly a huge leap, but they still need to be fine-tuned to be suitable for different tasks. In this respect, they are not significantly different from their predecessors. Current solutions mainly aim at combining visual and textual information, e.g., creating descriptions for images, text-based image generation, image-supported question answering, and possibly cross-modal search, where an image is used to find text or a text is used to find images.

The big question of the future is whether multimodal models can, thanks to more diverse data sources, acquire capabilities that today we still only classify as human common sense. As far as we know, the development of human-like abstractions is essential for a hypothetical task-independent AI. However, based on the capabilities of current models, it is not yet clear how much closer multimodality, which is certainly part of AGI, will bring us to it.

István ÜVEGES is a researcher in Computer Linguistics at MONTANA Knowledge Management Ltd. and a researcher at the Centre for Social Sciences, Political and Legal Text Mining and Artificial Intelligence Laboratory (poltextLAB). His main interests include practical applications of Automation, Artificial Intelligence (Machine Learning), Legal Language (legalese) studies and the Plain Language Movement.